Engineers at Duke University have developed a microscope that has taught itself the optimal settings needed to complete a given diagnostic task.

In the initial proof-of-concept study, the microscope simultaneously developed a lighting pattern and a classification system for quickly diagnosing malaria.

The microscope does this by identifying red blood cells infected by the malaria parasite more accurately than trained physicians and other machine learning approaches.

The study was published in the journal Biomedical Optics Express.

Diagnosing malaria

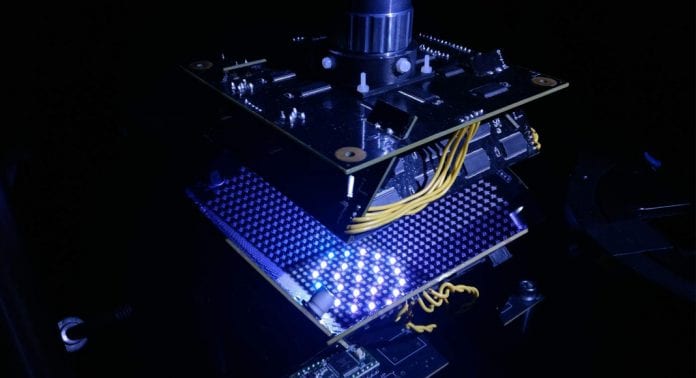

Roarke Horstmeyer, assistant professor of biomedical engineering at Duke, said: “A standard microscope illuminates a sample with the same amount of light coming from all directions, and that lighting has been optimised for human eyes over hundreds of years.

“Computers can see things humans can’t, so not only have we redesigned the hardware to provide a diverse range of lighting options, we’ve allowed the microscope to optimise the illumination for itself.”

Rather than diffusing white light from below to illuminate the slide evenly, the engineers developed a bowl-shaped light source with LEDs embedded throughout its surface. This allows samples to be illuminated in different colours and from different angles, up to nearly 90 degrees. Depending on the pattern of LEDs used, the light casts shadows and highlights different features of the sample.

The researchers then fed the microscope hundreds of samples of malaria-infected red blood cells prepared as thin smears, in which the cell bodies remain whole and are ideally spread out in a single layer on a microscope slide.

Using a type of machine learning algorithm called a ‘convolutional neural network’, the microscope learned which features of the sample were most important for diagnosing malaria and how best to highlight those features.
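To make the idea of learning the lighting and the classifier together more concrete, here is a minimal sketch in Python. It is not the researchers' code. It assumes that an image taken under any LED pattern can be approximated as a weighted sum of images taken one LED at a time, and it swaps the convolutional neural network for a simple logistic classifier to keep the example short. The data are synthetic, and every name (`make_toy_data`, `combine`, `train`, `accuracy`) is hypothetical.

```python
import math
import random

def make_toy_data(n=60, n_leds=4, n_pix=8, informative_led=2, seed=0):
    """Synthetic stand-in for cell images: one small image per LED per sample.
    Assumption: 'infected' samples look different under only one LED."""
    rng = random.Random(seed)
    samples, labels = [], []
    for i in range(n):
        y = i % 2  # alternate healthy (0) and infected (1)
        leds = [[rng.gauss(0.0, 0.1) for _ in range(n_pix)] for _ in range(n_leds)]
        if y == 1:
            for p in range(n_pix // 2):
                leds[informative_led][p] += 1.0
        samples.append(leds)
        labels.append(y)
    return samples, labels

def combine(led_images, led_w):
    """Weighted sum of one sample's single-LED images: one simulated image."""
    return [sum(w * img[p] for w, img in zip(led_w, led_images))
            for p in range(len(led_images[0]))]

def score(led_images, led_w, pix_w, bias):
    """Classifier output (logit) for one sample under the given LED pattern."""
    image = combine(led_images, led_w)
    return sum(c * x for c, x in zip(pix_w, image)) + bias

def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, labels, steps=200, lr=0.2):
    """Gradient descent on the LED weights and the classifier at the same time."""
    n, n_leds, n_pix = len(samples), len(samples[0]), len(samples[0][0])
    led_w = [1.0 / n_leds] * n_leds  # start from uniform illumination
    pix_w = [0.0] * n_pix            # classifier weights, one per pixel
    bias = 0.0
    for _ in range(steps):
        g_led, g_pix, g_bias = [0.0] * n_leds, [0.0] * n_pix, 0.0
        for led_images, y in zip(samples, labels):
            image = combine(led_images, led_w)
            z = sum(c * x for c, x in zip(pix_w, image)) + bias
            err = (sigmoid(z) - y) / n  # gradient of mean cross-entropy w.r.t. z
            g_bias += err
            for p in range(n_pix):
                g_pix[p] += err * image[p]
            for l in range(n_leds):
                # how the logit changes if LED l is made slightly brighter
                g_led[l] += err * sum(c * x for c, x in zip(pix_w, led_images[l]))
        led_w = [w - lr * g for w, g in zip(led_w, g_led)]
        pix_w = [w - lr * g for w, g in zip(pix_w, g_pix)]
        bias -= lr * g_bias
    return led_w, pix_w, bias

def accuracy(samples, labels, led_w, pix_w, bias):
    hits = sum((score(s, led_w, pix_w, bias) > 0) == (y == 1)
               for s, y in zip(samples, labels))
    return hits / len(samples)

if __name__ == "__main__":
    samples, labels = make_toy_data()
    led_w, pix_w, bias = train(samples, labels)
    print("learned LED weights:", [round(w, 2) for w in led_w])
    print("training accuracy:", accuracy(samples, labels, led_w, pix_w, bias))
```

In this toy setup, training raises the weight of the one LED that reveals the difference between the two classes, which is the sense in which the lighting pattern is learned rather than fixed in advance. The sketch leaves the LED weights unconstrained. A physical LED cannot have negative brightness, so a real system would need to handle that separately.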

The microscope classified the cells correctly about 90% of the time. Trained physicians and other machine learning algorithms typically achieve about 75% accuracy.

The researchers also showed that the microscope works well with thick blood smear preparations, in which the red blood cells form a highly non-uniform background and may be broken apart. For this preparation, the machine learning algorithm was successful 99% of the time.

According to Horstmeyer, the improved accuracy is expected because the tested thick smears were more heavily stained than the thin smears and exhibited higher contrast. However, thick smears also take longer to prepare, and part of the motivation behind the project is to cut diagnosis times in low-resource settings where trained physicians are scarce and bottlenecks are the norm.

With this initial success in hand, Horstmeyer is continuing to develop both the microscope and machine learning algorithm.

Horstmeyer said: “We’re basically trying to impart some brains into the image acquisition process. We want the microscope to use all of its degrees of freedom.

“So instead of just dumbly taking images, it can play around with the focus and illumination to try to get a better idea of what’s on the slide, just like a human would.”